🌍 2025 Wrapped: Presenting to the IUCN and Other Highlights

It's nearing the end of the year, and as things are slowing down ahead of the holidays, I thought I'd write a short wrap-up post to reflect on the past few weeks, and the term as a whole.

I've split this up into four sections: AI for the Red List Assessor Exam, Flora Explorer, Other Highlights, and Looking Forward.

AI for the Red List Assessor Exam: Presenting to the IUCN

One of the projects I've been most excited about this past term explores whether we can use AI to accelerate assessments for the IUCN Red List. There was an exciting development on this last week: I presented my work on AI for the Red List Assessor Exam to the IUCN last Thursday, and they're keen to see if we can collaborate in the new year!

How did this meeting even come about? Anil shared a link to my post, "Can Claude Code Pass the Red List Assessor Exam?", on his blog.

A friend of Anil's, Carly Batist from Conservation International, came across this and forwarded it to Neil Cox, who leads the Biodiversity Assessment Unit at the IUCN. Neil reached out to Anil saying these were really interesting posts, and asking if we could discuss them in the new year. I'm very grateful to Anil, who proactively asked to meet sooner if possible, to give us time to reflect on research ideas ahead of the break. Suddenly we had a meeting lined up with many other important people at the IUCN, including Craig Hilton-Taylor (Head of the Red List Unit), Caroline Pollock (Senior Programme Coordinator for the Red List Unit), Simon Tarr (Red List Data Manager), Miguel Torres (Red List Systems Manager) and Richard Jenkins (Head of Biodiversity Assessment and Knowledge Team).

On the day, it went really well, and it looks like the IUCN would be very open to working together to take this forward. We had some great discussions, and everyone was super enthusiastic on the whole (with the possible exception of Caroline, who designed the exam and training course 10 years ago: it turns out she'd been stumped as to how someone was solving the exams so quickly and messing up all her reporting! We all had a good laugh about that). Here's a rough script for what I presented, and here's a video recording of me doing a re-run of the presentation to my parents later that evening (so they now finally understand what I've been working on!).

In the meeting, I also presented the dashboard I built recently to help visualise assessment coverage and prioritisation, which they were also really supportive about.

We identified three useful ways AI assistance could fit in: (1) using AI to help validate new draft assessments and re-assessments, (2) maintaining a 'living Red List' with a dynamic evidence base for each species that is automatically kept up-to-date, and (3) producing a prioritisation order for expert assessors for new assessments and re-assessments. It turns out that many of these ideas had been discussed in an IUCN workshop in Cambridge in June earlier this year, and so there is strong appetite to continue this momentum.

In terms of next steps, there are two key directions I'll be looking to explore in the new year, towards the ultimate vision of an AI-assisted 'living' Red List evidence base:

- Benchmarks – this seems like the key starting point, defining the success criteria for AI-assistance in various aspects of the Red List workflow. It could build on top of the Red List Assessor Exam framework (bolstered by synthetic questions perhaps), and we could progressively add complexity to better reflect the real-world Red List assessment process. Such complexity will make this a valuable benchmark for agentic capabilities more generally – since Red List assessments involve making important real-world judgments via complex reasoning amidst ambiguity, uncertainty, diverse modalities and incomplete information.

We're in a unique position to work closely with actual Red List assessors to design these collaboratively (Richard and Simon both strongly encouraged me to come and spend lots of time chatting to assessors in the DAB, which I'd love!). Moreover, because the Red List is updated twice a year, we have a recurring held-out test set that will fall after the training cut-off dates of new frontier models as they get trained (e.g. the 2026-1 Red List update will be released early next year). I'm eager to look more into Ai2's AstaBench for inspiration here, as well as OpenAI's new FrontierScience release. Benchmarks are also an amazing way to get the machine learning community working together to tackle a problem.

- Agentic search with literature – this seems like the logical and super valuable next addition to the dashboard, highlighting relevant literature sources that weren't used in previous assessments. Building for the future definitely involves giving AI agents skills for querying large databases like OpenAlex in ClickHouse, and we're still figuring out how best to set this up.

One thing I'm still wrapping my head around, and need to keep in mind here, is the balance between research and product engineering in my work, and being very intentional about it.

But on the whole I'm feeling highly motivated – collaborating with the IUCN to improve the Red List feels like a huge opportunity to have a positive impact on the planet through my research. It's an incredible position to be in – doing cutting-edge AI research at Cambridge, in collaboration with the world's largest environmental organisation, to support one of the most important information sources in global biodiversity conservation.

I'm looking forward to reflecting more on all of this over the break, and then taking this forward in the new year.

Flora Explorer: Can We Identify Plants From Space?

A quick word here on another project I took a brief look at, but have parked for now. The main idea is to benchmark how well we can currently use Tessera for fine-grained plant species identification from space. A dynamic 'tree species heatmap' is a tool I've wanted myself whilst wandering around Cambridge – even a soft prior on which trees are around me would be super interesting as I try to learn more about trees.

The research questions here can be expressed as: are there some plant species we can accurately identify from space using foundation model embeddings? If so, which ones? What about fungi (the Red List aims to assess 20k fungal species by 2030, but has only done 1k so far)? How does the GPS uncertainty in the underlying GBIF labels fit in? Moreover, how many training samples would we need per species to attain a given level of accuracy, and does this number depend on species' taxonomy or trait data? Could techniques like generalized category discovery help tackle the 'long-tail' problem with plant data? And how will all this change once we get P-band radar?

If you're interested in more details, you can check out a Claude-generated write-up of some of the things I've tried so far. My key takeaway thus far: honest reporting of accuracy results in geospatial machine learning is not as straightforward as I'd first thought! I learned that techniques like spatial thinning and spatial blocking are essential to protect against spatial autocorrelation effects, where nearby (and often near-duplicate) samples end up in both the training and test sets and inflate the reported accuracy.
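To make spatial thinning and spatial blocking a bit more concrete, here's a minimal, standard-library-only Python sketch. It is not the code from the write-up: the function names (`spatial_thin`, `spatial_block_folds`, `block_id`), the square degree-based grid, and the cell/block sizes in the usage example are all illustrative assumptions. Thinning keeps at most one record per species per grid cell, which removes clusters of near-duplicate occurrences; blocking then assigns whole grid blocks, rather than individual points, to cross-validation folds, so no training point shares a block with a test point in the same fold.

```python
import math
import random
from collections import defaultdict

# Hypothetical record format: (lat, lon, species_label), e.g. a GBIF occurrence.
Record = tuple[float, float, str]


def block_id(lat: float, lon: float, block_deg: float) -> tuple[int, int]:
    """Return the square grid cell (sized in degrees) containing a coordinate."""
    return (math.floor(lat / block_deg), math.floor(lon / block_deg))


def spatial_thin(records: list[Record], cell_deg: float) -> list[Record]:
    """Spatial thinning: keep at most one record per species per grid cell.

    The first record seen for each (species, cell) pair is kept, which
    removes clusters of near-duplicate occurrences.
    """
    seen: set[tuple[str, tuple[int, int]]] = set()
    kept: list[Record] = []
    for lat, lon, label in records:
        key = (label, block_id(lat, lon, cell_deg))
        if key not in seen:
            seen.add(key)
            kept.append((lat, lon, label))
    return kept


def spatial_block_folds(
    records: list[Record], block_deg: float, n_folds: int, seed: int = 0
) -> list[tuple[list[int], list[int]]]:
    """Spatial blocking: assign whole grid blocks (not single points) to folds.

    Returns one (train_indices, test_indices) pair per fold. Within a fold,
    a training point never shares a block with a test point.
    """
    blocks: dict[tuple[int, int], list[int]] = defaultdict(list)
    for i, (lat, lon, _) in enumerate(records):
        blocks[block_id(lat, lon, block_deg)].append(i)

    block_keys = sorted(blocks)
    random.Random(seed).shuffle(block_keys)  # randomise which blocks go where

    folds = []
    for k in range(n_folds):
        test_blocks = block_keys[k::n_folds]  # deal blocks round-robin into folds
        test = sorted(i for b in test_blocks for i in blocks[b])
        test_set = set(test)
        train = [i for i in range(len(records)) if i not in test_set]
        folds.append((train, test))
    return folds


if __name__ == "__main__":
    occurrences = [
        (52.205, 0.125, "Quercus robur"),
        (52.2051, 0.1251, "Quercus robur"),  # near-duplicate in the same cell
        (52.2051, 0.1251, "Fraxinus excelsior"),
        (52.31, 0.05, "Quercus robur"),
        (52.12, 0.25, "Fraxinus excelsior"),
    ]
    thinned = spatial_thin(occurrences, cell_deg=0.01)
    for train, test in spatial_block_folds(thinned, block_deg=0.1, n_folds=2):
        print(f"train={train} test={test}")
```

A degree-based grid is a simplification: cells get narrower in longitude towards the poles, and in practice the block size should be chosen relative to the distance over which the data are autocorrelated. If you already use scikit-learn, passing block IDs like these as the `groups` argument to `GroupKFold` gives a similar block-level split.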

I'm definitely keen to continue to look into this in the new year, as I still want answers to the questions spelled out above. However, it's probably wise for this to just be a side project for me, to make sure I'm not spread too thin and protect my primary focus on the AI for the Red List project for now.

Other Highlights

I'll end with some pics from various notable and enjoyable extra-curricular activities during the past term.

1. Weekly EEG Seminar Meetings and the EEG Christmas Dinner

It's been so great meeting the wonderful people in the EEG and learning about the interesting things each person is working on. The EEG seminar also provided a safe space for me to demo my AI for the Red List Assessor Exam work for the first time, and gave me great initial feedback (many thanks to Keshav, Robin and Jon for thought-provoking questions!).

2. EurIPS in Copenhagen with Sadiq, Frank and Yihang, attending the AI for Conservation conference.

I had a bunch of new ideas spark from the conference, and really enjoyed meeting other people passionate about this space. In a fantastic coincidence, on the flight down I was sat next to Drew Purves (Nature Lead at Google DeepMind), who was attending as one of the keynote speakers, and we had a great 2-hour conversation excitedly sharing ideas about how AI could help nature. I also loved spending time with Yihang and Frank exploring Copenhagen, learning about life back in China, and being treated to a meal at a delicious Cantonese restaurant!

3. ARIA workshops: Foundational AI to forecast ecosystem resilience and Next Generation Ecosystem Models.

There were many fantastic forward-looking ideas and discussions, and I met many very impressive people, including Julia Jones, Robin Freeman, Mike Harfoot, Oisin Mac Aodha, Calum Maney and Drew Purves, amongst many others.

4. Football for the JCFC 1s

Whilst we sadly weren't able to retain our Cuppers title, it's been great fun.

5. Rowing for JCBC NM3s

I'm certainly not a natural rower (and am repeatedly told I need to stretch more), but it's been good to try something new!

6. Reuniting with South African friends in Cambridge

I'm grateful to have had some close friends from home already here in Cambridge when I arrived – it made settling back into Cambridge a much easier transition.

(As an aside: it's an incredible time to be a South African sports fan, with the Springboks rugby team's continued success, our cricket teams entering a new era, and Bafana Bafana qualifying for the 2026 FIFA World Cup in North America – our first World Cup since we hosted it back in 2010, which I have very fond memories of.)

7. Meditation Nights at the Cambridge Buddhist Centre

There's a lovely, warm Buddhist community here in Cambridge. The regular, community-centred Thursday night meditation sessions have provided a welcome counterbalance to the very strict and intense 10-day Vipassana retreat I undertook in the Blue Mountains outside Sydney at the start of the year.

8. Lastly, I took ballroom dancing classes for the first time (sadly no pictures; I'll rectify this next year!).

As a famously bad dancer, I found it great to learn some set moves from the Waltz, Cha-Cha, Jive, Tango and Quickstep.

Looking Forward

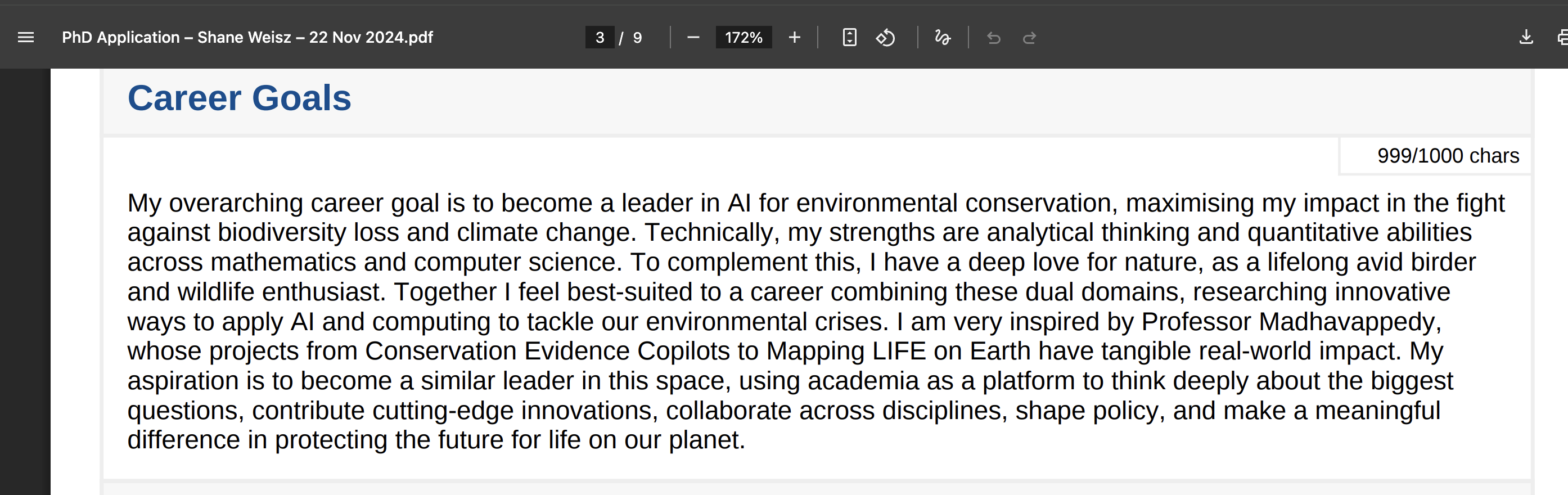

I'm feeling very grateful to be here doing my PhD in Cambridge. I recently took a look at the career goals I wrote in my PhD application over a year ago, and it's very satisfying to reflect on living true to those aspirations.

It's a real privilege to be here: with the freedom to think deeply about solving important global problems and to research ground-breaking AI technology, with a supportive, patient and inspiring supervisor who I'm learning so much from (I still don't quite believe Anil has the same 24 hours in a day as the rest of us), and surrounded by brilliant minds in the EEG and CCI who care deeply about making a difference in the world. I'm left feeling incredibly excited for 2026.

See you in the new year, and happy holidays everyone!